Coders of the Carribean post-mortem

Table of contents

Introduction

When I got addicted to CodinGame (CG) almost a year ago now, I quickly had a single objective in mind: win a contest. It’s hard to accurately describe how it feels to finally accomplish that goal after putting so much time and effort towards it, while learning and having fun during the journey most importantly! It was all worth it and reminded me of how and why my passion for coding started, and for that I am very thankful to CG for providing such a platform.

So it is perhaps why I got a little carried away writing this article. While I typically trim a lot of what I write after the fact, I chose to skip it this time around to publish it faster, and also because stuff I am afraid is boring to read or going too much in details might perhaps be interesting for some others.

This post-mortem will describe the strategy of my final submission, but also about how I approached this contest, the ups and downs as the week progressed, and briefly touch on the way I work at it.

I hope you will enjoy reading (and my apologies for that) the massive wall of text below, and maybe even learn a thing or two from it!

Game recap

Not everyone reading this will have participated in the contest, so here is a simplified recap of the game rules loosely inspired from the official statement. At the risk of offending readers with a nautical background, I took the liberty of replacing the PORT and STARBOARD commands with turning LEFT or RIGHT, as I find it easier to understand.

In this game, you are in command of pirate ships and your goal is to avoid running out of rum. If all the rum dries up on a given ship, your crew will go mad and you will lose the ship.

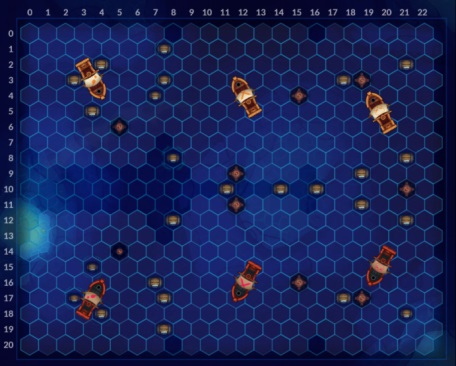

The game is played on a hexagonal grid 23 cells wide and 21 high.

Both players have between one and three ships. Every ship is 3 cells long and 1 cell wide.

Each turn, the players can give one command per ship: go

FASTERorSLOWER, turnLEFTorRIGHT,FIREa cannonball at a target position, drop aMINEbehind the ship or simplyWAIT. Cannonballs will take between 1 and 4 turns to land depending on the traveled distance.On the grid you will find barrels of rum containing various amounts measured in units, and can be collected by a ship when touching it. Each turn, a ship consumes 1 unit of rum on board.

A ship hitting a mine or hit by a cannonball will be damaged, and more importantly lose units of rum.

The game ends when one player loses all their ships by running out of rum.

Workflow

This is my 5th contest at CG, and every one left me with a nagging thought: “I wasted too much time, I need a better workflow”. It’s the first time now I don’t feel that way nearly as much, which is great because less time wasted means more time spent writing a better AI.

Tools

This is stuff I wrote over the last months, which proved to be useful over time.

C++ packager: I got sick of maintaining a huge cpp file every contest, and copy/pasting between them, so I wrote a very simple preprocessor which packages multiple files into one for CG. This allowed me to write plenty of useful bits of code to be included in any CG game, and possibly further split my codebase between simulation and AI for instance. Personally I tend to think much better with less code around to focus on.

#include "stdcg.h"

#include "timeout.h"

#include "bitstream.h"

#include "array.h"

#include "point.h"

#include "grid.h"

//...Replay downloader: A program to download the JSON files used to store replays on CG. The main use case is for producing a crude but practical suite of nearly exhaustive unit tests for the simulator code at a very low cost. Replaying thousands of matches and validating the expected result turns a simulator cannonproof very quickly, making optimizations and refactors very safe.

Replay analyzer: The very same replays can also be parsed to produce detailed statistics of win ratio according to players, game conditions and bot version. For instance, this is how I later quickly found out my bot was very strong in 1v1 but weak in multi-ship scenarios, and allowed me to focus on what to improve and against whom. It’s also great for spotting regressions.

Distributed simulations: This may be overkill, but it was still a lot of fun to make and a learning exercise all the same. Having a setup to play your bot against itself using different configurations can be immensely useful as it was in Fantastic Bits, but time consuming. So I wrote a small client/server solution to distribute games to play over network to the few PC/laptops I had at my disposal to get much faster results. Local benchmarking did not help me as much on this contest, but it was still good to have inspiration on tweaks that seem to work better.

Code

State serializer: A memory stream used to write every piece of information about the current state. It is then encoded in base64 and written to stderr at the beginning of each frame. When a bug, crash or weirdness occurs, the scenario can be debugged comfortably by just copy/pasting that string in a local IDE (I use Visual Studio). It’s a real life saver.

Handmade structures: It is hard for me to profile what’s really running on the servers, as I am not a Linux person, so I prefer having peace of mind by knowing exactly what happens when using a vector, map, grid, etc. It also has the benefit of easily adding traps when indexing is out of bounds or other stuff that would result in memory corruption or overflow, which are much harder to debug after the fact. Using a #define before submitting or benchmarking removes all those traps for best performance.

Profile early, profile often: You never know when innocent looking code can end up smashing performance faster than pirates gulping down a barrel of rum. Writing performance information in stderr after every frame is key, and when a frame looks bad, its serialization string is available to profile it locally.

Log everything: I log the search, log the details of the evaluation function on the current frame, log as much as possible to gain insight on what’s going on when things go wrong (and they will). If performance becomes an issue, I use #defines or templates to enable them on demand:

template <bool LOG = false> int evalScore(const State& state)

{

// This will be omitted when the function is not explicitly templated.

if (LOG) // cerr << ...

}Source control: If you don’t already, just use git. You’ll be thankful when you want to remember what changed between versions, or revert stuff that just doesn’t work.

Contest strategy

First steps

Nowadays I always start contests with the same routine. I first write throwaway code to get to bronze league using the least effort possible. In this case, for every ship the algorithm was:

- Go to nearest enemy ship

- If possible, fire at the position it’s going to be when the cannonball lands if the ship just

WAITs. - If not, fire at a mine. Make the sea safer for everyone, but mostly for me.

- If low on health, go to the nearest barrel of rum instead.

It could have worked with less, but it was easy enough regardless.

Afterwards I wrote a simulator able to advance the game state by one frame using as input a structure containing moves for all players. Using the unit tests (as described in the tools above) spotted all the bugs immediately and resulted in a quick and 100% accurate simulator within a few hours. At this point, having the referree code available is really just the cherry on top.

For the state structure, I used static arrays for ships, barrels, mines and cannonballs, along with a 8 bit grid to store in each cell: the entity type in the upper 2 bits and its index in the lower 6 bits (hoping no one goes crazy with mines). I needed to distinguish between ship front/middle/back as well, so I just snuck that in the middle unused bits since there are only 6 ships at most. No space wasted! Everything was stored in axial coordinates internally since it makes many operations simpler.

Once that was done, in order to get an easy feel of how everything is working out, I added a simple weighted Monte Carlo search, with an evaluation function leading the AI to perform the same simple behavior as before, only with lookahead and manual steering. This gave me second place after the first day, so not too shabby.

Mid contest

I took some time off after that (it being Easter week-end and all), but coming back to the contest afterwards, I found I was running out of ideas quicker than anticipated. I modified the algorithm to become evolutionary in a similar way to what I did in the Fantastic Bits contest, but only met mitigated success for this game.

At the same time, everyone’s bot had been improving meanwhile, so firing at them was no longer as effective as they now knew how to dodge, and mines seemed to be a pointless distraction as clearing or dodging them was easy. Because of that, the metagame was evolving towards being greedy with barrels: take them as late as possible, maintain a ship with highest rum and win the attrition war while dodging dumb fire. Royale’s bot made an impressive display of that early on, achieving top 3 without using mines or cannonballs at all.

Watching more replays of my bot, I could not help having a strong impression: this game sucks (according to my tastes). Why even have offensive options if playing defensively is so effective? Passive play is too often boring to watch and code. But at some point, analyzing a match caught my attention on something. I forgot exactly what it was exactly or why, but I suddenly realized I was very wrong. This game is actually great and has deceiving depth that I completely missed at first.

It may sound silly, but it was a revelation to me. Hitting ships is the end, not the mean. The game is first and foremost about controlling and leading ships towards vulnerable positions. Because of the way steering works, you can easily find ways to control the area an enemy ship can reach, making it increasingly vulnerable to enemy fire. This is typically accomplished by forcing an enemy ship towards mines or borders, or making the FASTER move impossible without taking damage, or even better, force a SLOWER.

So I drew on paper the possible locations a ship could reach within one, two and three turns, according to initial speed. A few sweet spots to move and fire at became immediately apparent to greatly limit the enemy ship’s options, giving much better chances at controlling it, and possibly even sink it.

It became clear to me that stochastic optimization, better known as “random search”, would not cut it this time. It could potentially miss game winning scenarios where it’s possible to trap a ship to its demise, but requiring a singular, specific move sequence to do so. Or vice versa. Predicting the enemy movement with this model also looked like a painful mess.

I am actually surprised so many contestants had great results going that road. Kudos to them, I couldn’t this time around.

Switching to brute force

Following this thought, I first rewrote the AI to use a brute force search per individual ship instead. The algorithm went like this:

- Take one ship.

- Exhaustively search all sequences of 3 moves ahead, assuming

WAITcommands for other ships. - Keep the best sequence for that ship in a “best plan”, according to the evaluation function (adjusted to player side) on the final state.

- Repeat for the next ship, but instead of assuming

WAITcommands for other ships, use the last best sequence found instead. - When all ships are done, repeat again until time is elapsed. Always use the best sequence found for the other ships when searching the tree for one ship.

FIREcommands used the aforementioned sweet spots.

I also rewrote the evaluation function from scratch to take into account more positional factors, like distance to border, directional proximity of mines, keeping safe distance between allied ships, as well as ship speed (stationary ships sleep with the drowned god). And since I was much more interested in combat at the time, I gave a huge weight to get closer to the front or sides of a random target near the sweet spots, and maintaining sights on it. It was time to get up close and personal.

The initial results were very satisfying to watch compared to barrel wars. My ships were now suicidally insane and somehow still won most of their fights. With a few more tweaks and toning down the bloodlust just a notch, that got me back near the top of the leaderboard, for a bit.

The (brute) force wasn’t with me

There were still many matches leaving me unsatisfied, where ships sailed into obvious traps and failed to engage an enemy properly. My new search algorithm was flawed, it could not handle some of the more interesting, complex and interactive scenarios. Because the opponent’s plan was always known ahead of the search, it would adjust too specifically to it.

For example, imagine two deadly scenarios, one resulting from going LEFT, the other from going RIGHT, but requiring different manoeuvering from the enemy to achieve it. Each time you evaluate, you only see the LEFT or RIGHT scenario from the enemy, and so by avoiding it you fall back in the trap of the other unknown scenario when you try to plan for the enemy again. Catch 22.

To be fair I was expecting that to potentially happen, but hoping it would not be frequent enough in practice to matter much. But it wasn’t the case. A search algorithm of the minimax class clearly works much better in those situations, but it could also have abysmal performance or too limited depth with this game. Not to mention the complexity of multiple ships interaction as well as simultaneous moves.

Let’s have pirates play chess anyway

Luckily, minimax search has been the subject of very extensive research for decades in chess AI programming. The massive amount of available information online could give me a chance at making a really good and speedy implementation in little time. Then perhaps I could find a way to deal with the multiple ships situation later. If anything, I was most interested in not losing single ship confrontations, that was definitely the fun part to me. As for the simultaneous moves issue, I chose to ignore it completely and simply give the knowledge advantage to the enemy, to get a more cautiously optimistic result.

I ended up doing most of the classics: alpha-beta pruning, static and dynamic move ordering, principal variation search combined with iterative deepening, using transposition tables to accelerate the search in deeper plies. I also briefly toyed with aspiration windows but was quickly running out of time. I won’t bother with the details of those concepts, there is wonderful literature out there a Google search away that will explain much better than I could here, and this article is getting long already. They are also not as complex as they sound like, but as always the devil is in the details.

After a lot of testing and optimizing, I submitted my first working minimax variant late on Friday with the same evaluation function as before, and climbed from top 15 to top 3. It was now very often searching 4 turns ahead (for all combinations of two ships) with ease, sometimes even 5 or more, instead of barely 3 in brute force.

Saturday’s disaster

I got overexcited with the success of this new search, and kept working on it, improving its performance and breadth of moves searched, handling more scenarios, convinced it could become even better. I also added a few heuristics I wanted to try in the evaluation, getting impatient to see how it would work out.

Then later on, I looked at the games it played with more attention and noticed it wasn’t winning any games vs the top 5 anymore. Oops. I had committed a serious programming sin: making too many changes at once without testing each of them properly. The time pressure was finally getting to my head. So in hindsight, what happened?

First, the search got too good. Remember when I said it was looking for cautious results? Well, the ships finally became aware that enemy ships could potentially fire in a lot of places, actually. Facing the inevitability of death, they cowardly stayed out of range of the enemy and the now drunken crew was too depressed to even bother shooting their cannons anymore, because what gives? They would not hit their target anyway.

On top of that, there were bugs in the search. My transposition table was not remembering alpha-beta cutoffs correctly in some cases. Some other ideas of search improvement, such as reusing the intermediate tree search results from other searches, turned out to have nasty shortcomings I could not possibly fix in time. One critical sign error slipped in a new part of the evaluation function that my log should have made immediately visible, if only I had paid attention to it earlier. Some of the new heuristics were actually terrible in practice, such as giving more importance to your own rum than the enemy’s. It was a mess. And to make it worse, meanwhile I was dropping further down on the leaderboard.

I stopped the crisis by going to bed.

Sunday’s last sprint

There was only one sane option left at this point: keep calm and revert. Thank you, source control.

Using a diff tool, I extracted some of the last changes that looked more promising and having lower risk, isolated each one, and put them on a waiting list. On top of that, by focusing so much on the main aspects of search and evaluation, I was accumulating a growing TODO list of smaller tweaks and ideas to try, so I added them in as well.

At that point, I needed to find anything that could give better results and quickly, time was running out. So as soon as the next change on the list showed no significant improvement, it was immediately discarded to move on to the next. What started showing promise was then submitted soon after, with its results closely monitored.

Here’s some of what made the cut that day for one hell of a last minute climb:

Detecting when the enemy fires by looking for new cannonballs in the input. Because a ship can only fire every 2 turns at most, more optimistic scenarios could be found.

My win ratio was sometimes 25% lower in 2v2 and 3v3. I did a quick fix (hack?) for the multiple ships scenario by evaluating pairs of ships in decreasing distance order, keeping the best sequence found on other ships for the closer pairs. If the ships are too far from each other, it does not search for the enemy’s moves at all, but it still uses its best sequence to maintain good chasing and area control.

The minimax will make use of cannonball

FIREonly if it’s certain it will give better results. But sometimes you have to take chances to better control another ship. So by now, anyWAITcommand returned for the first turn could potentially be changed toFIREduring a post process that was ran after all search was done. It uses the best sequence found for the enemy, replays it, and fires at a location where it could hit that ship during that sequence. The idea is to force the enemy into picking a suboptimal sequence, assuming it originally found the optimal one.Ironically enough, that post process would consider locations one turn too early due to an initial bug, but by the time I noticed, it turned out to be amazing in practice. Fixing it even caused a regression so I left it alone. I didn’t have time to fully analyze why, but I assume it’s because of the higher probability of the enemy being closer to the prediction at lower depths. The wonders of testing…

A simple voronoi diagram made from each player’s ship position was used to calculate barrels “controlled” by a player, and weighted in the evaluation according to their amount of rum. This caused a big improvement in early game planning where controlling rum is so much more important than ships.

I put more emphasis on tweaking positioning in the evaluation as well, giving a bigger penalty when allied ships were too far from each other, and when enemy ships were too far as well. This created more favorable encounters more often, and less scenarios where a stranded sea wolf would

stupidlybravely try to take out three ships on his own.I defined the ship having the most rum for each player as being the ‘flagship’, and therefore made it a very high value target. This caused interesting behaviors where low rum ships suddenly cared less about surviving if they could take out the flagship with them or cause significant damage by blowing themselves up on a mine for example.

Anything else? I forgot by now.

My last submit 4 hours before the deadline sealed the deal when it was finally able to maintain above 60% win rate against the top 3. Mission accomplished.

Conclusion

If you made it this far, congratulations! I hope I did not bore you to death with this sea of details, and that you found reading this article to be interesting.

Thanks to CodinGame for a great contest, to everyone for participating and creating a fun atmosphere in game and in chat, to Agade, pb4 and Royale for a fierce fight at the top with great matches, and to my girlfriend and friends for being awesome and supporting me through this intense competition.

– reCurse signing off, yo ho ho with a barrel of rum!